AI’s Reality Problem: The Growing Challenge of “Hallucinations”

In the rapidly evolving landscape of artificial intelligence, a critical issue is emerging that threatens to undermine trust in AI-generated content: the phenomenon of “hallucinations.” This term refers to instances where AI systems, particularly large language models (LLMs), produce false or nonsensical information in response to user queries, often with a veneer of plausibility that can deceive even discerning users.

The problem stems from the fundamental architecture of these AI systems. LLMs are trained on vast datasets to predict a likely next word (or “token”) given the text so far, and they are designed to generate a response to any prompt, whether or not the relevant information exists in their training data. This inherent drive to produce an answer, coupled with the complexity of real-world knowledge, inevitably leads to instances where the AI “hallucinates” information.
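To make that drive to produce an answer concrete, here is a toy sketch of a single decoding step. It assumes a hypothetical four-word vocabulary and made-up scores (“logits”), and it is not how any particular production model is implemented. The point it illustrates is general: standard decoding turns scores into probabilities that always sum to 1 and then picks a token, so some answer comes out even when the model has almost no preference among the options.

```python
import math
import random

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def next_token(vocab, logits, rng):
    """Sample one token; a token is always returned, however unsure the model is."""
    probs = softmax(logits)
    token = rng.choices(vocab, weights=probs, k=1)[0]
    return token, max(probs)

# Hypothetical candidate answers to a question the toy "model" has no real data about.
vocab = ["1912", "1915", "1921", "1934"]
# Nearly flat scores: the model has no meaningful preference among the options.
uncertain_logits = [0.10, 0.05, 0.00, 0.02]

rng = random.Random(0)  # fixed seed so the run is repeatable
token, top_prob = next_token(vocab, uncertain_logits, rng)
print(f"answer: {token} (top probability only {top_prob:.2f})")
```

Nothing in this loop checks whether the chosen answer is true; unless a model has learned to express uncertainty, a confident-sounding token is produced either way.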

Can AI hallucinations be solved?

Experts in the field argue that hallucinations are an unavoidable consequence of current AI technology. As one researcher noted, “For any LLM, there is a part of the real world that it cannot learn, where it will inevitably hallucinate.” This limitation extends even to seemingly simple requests, highlighting the pervasive nature of the problem.

The issue is further compounded by how these models are trained and calibrated. Models are optimized to produce fluent, natural-sounding text, and fluency does not guarantee factual correctness. Research on calibration, meaning how closely a model’s confidence matches how often it is actually right, suggests that even well-calibrated language models are still prone to generating false information.

The proliferation of AI-generated content is having far-reaching consequences for the information landscape. Researchers at the University of Zurich have found that AI can produce more compelling and persuasive disinformation than human-authored content, raising concerns about the potential for widespread manipulation of public opinion.

This flood of synthetic text is making it increasingly difficult to distinguish fact from fiction, eroding trust in traditional media and democratic institutions. As one expert warned, “The more polluted our information ecosystem becomes with synthetic text, the harder it will be to find trustworthy sources of information.”

Emerging Challenges to Research

The sheer volume of AI-generated content poses a significant challenge to traditional fact-checking methods. Because AI can produce disinformation quickly, cheaply, and in virtually unlimited quantities, current verification processes risk being overwhelmed.

This shift is also affecting journalism and academic research. As AI-generated content becomes more prevalent, there is growing concern that journalistic and research-based evidence is being used less often in citations and as the foundation for factual claims.

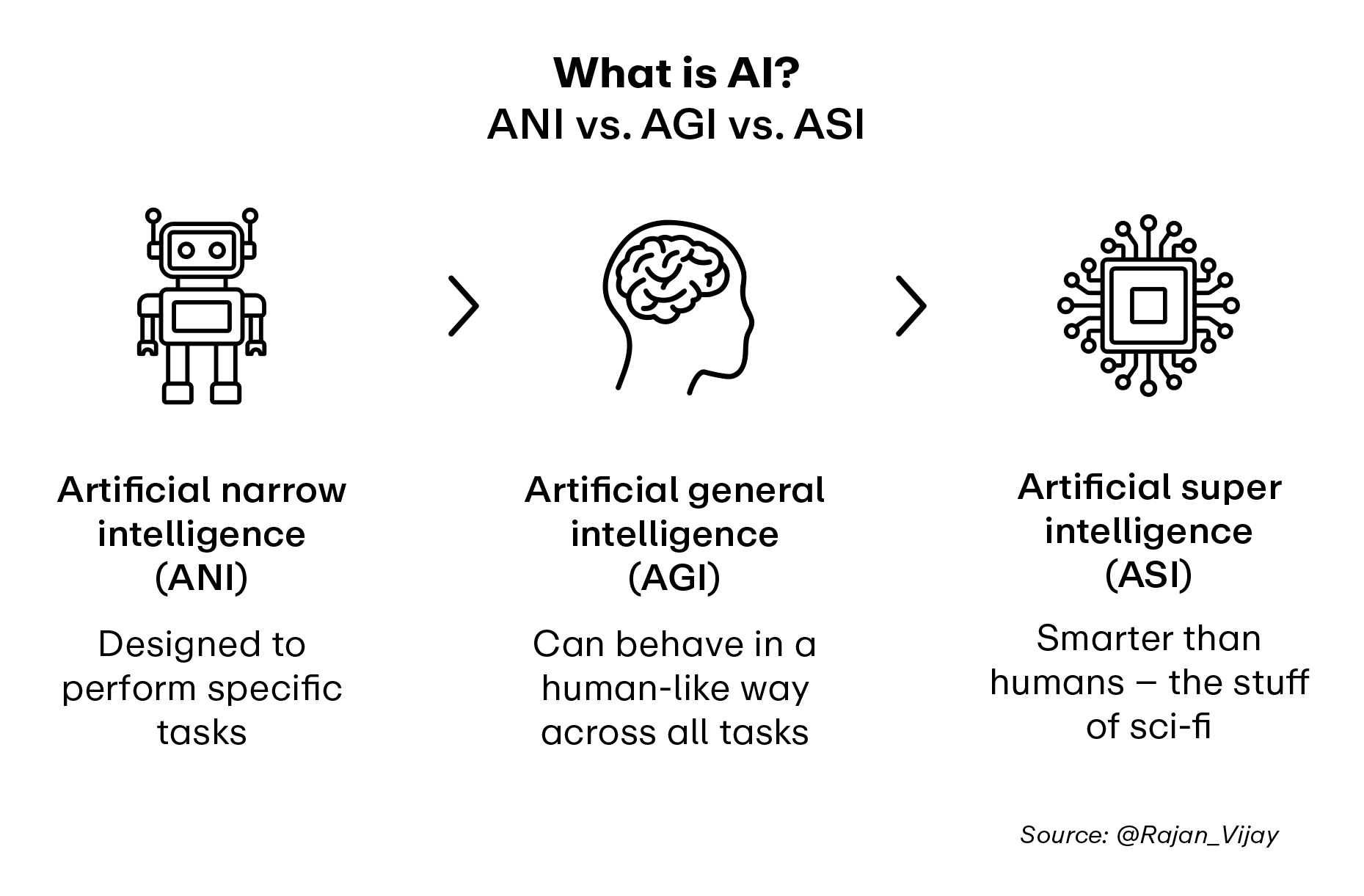

As AI technology continues to advance, addressing hallucinations remains a critical challenge for developers and users alike. While there is currently no foolproof method to guarantee the factuality of AI-generated content, ongoing research and development aim to reduce the problem. One direction in which the AI sector may grow is toward artificial general intelligence (AGI).

AGI

Artificial general intelligence (AGI) refers to the hypothetical intelligence of a machine that possesses the ability to understand or learn any intellectual task that a human being can. It is a type of artificial intelligence (AI) that aims to mimic the cognitive abilities of the human brain.

OpenAI CEO Sam Altman has declared on his personal blog that his team “knows how to build AGI as we have traditionally understood it.” Altman’s confidence is evident in OpenAI’s pivot toward an even grander ambition: superintelligence. As he notes in the blog post, “We are beginning to turn our aim beyond [AGI] to superintelligence in the true sense of the word.”

In this evolving landscape, education and public awareness play pivotal roles. Preparing individuals to work alongside AI, rather than be replaced by it, will be essential. This includes fostering skills that AI currently struggles with, such as emotional intelligence, creative problem-solving, and ethical decision-making. So while AI may eventually reach beyond today’s horizons, current LLMs still have unsolved problems, which means critical thinking and human judgment remain essential in a world increasingly shaped by AI.